Foundation and Large Language Models

Beyond improving the dataset, Cleanlab Studio allows you to train and deploy foundation models on messy real-world data with a few clicks. The AI that Cleanlab uses to detect issues in your dataset is powered by such models, which are automatically fit to your data.

Practice data curation like the best generative AI teams

Cleanlab software helps you effectively curate data without large teams of experts. Easily build your own data engine like those that power leading AI teams!

Since training data shapes the capabilities of any learned model, data filtering is a powerful tool for limiting undesirable model capabilities.

We prioritized filtering out all of the bad data over leaving in all of the good data. This is because we can always fine-tune our model with more data later to teach it new things, but it’s much harder to make the model forget something that it has already learned.

— OpenAI blog on DALL·E 2, describing how they produce one of the best available generative image models

If you teach the model something wrong, it will lock that in and forever believe that wrong thing.

I was not prepared for how sensitive some of these models are.

— Aidan Gomez (Founder & CEO of Cohere), speaking on the Weights and Biases podcast about the data sensitivity of LLM training

Data is the new oil but it's got to be clean data.

The valuable data here is the content that is structured in the world that allows you to learn principles as opposed to again, the big data age where it was about as much data as possible to extract patterns.

— Emad Mostaque, founder and CEO of Stability.ai, on the Infinite Loops podcast

I do believe all the great labs are actually pouring huge amounts of energy into cleaning their data.

— Nat Friedman, former CEO of GitHub and investor in hundreds of AI startups, via Stratechery

At Tesla, I spend most of my time just massaging the datasets, and this takes a huge amount of work/effort and you want to do it extremely well.

— Andrej Karpathy, former Director of AI at Tesla and co-founder of OpenAI, at Spark+AI Summit

Read more about this topic: Data Engine Design by George Pearse, ML Engineer at Binit.AI

Related applications

Data Annotation & Crowdsourcing

Label data efficiently and accurately, understand annotator quality.

Data Entry, Management, and Curation

AI expert review of your data stores to find errors or incorrect labels.

Customer Service

Produce better data and models faster for customer support, customer segmentation, ...

Content Moderation

Train more accurate content moderation models in less time.

CLEANLAB IS BUILT FROM THE GROUND UP TO SUPERCHARGE LLMS

- Improve LLMs on Databricks with Cleanlab

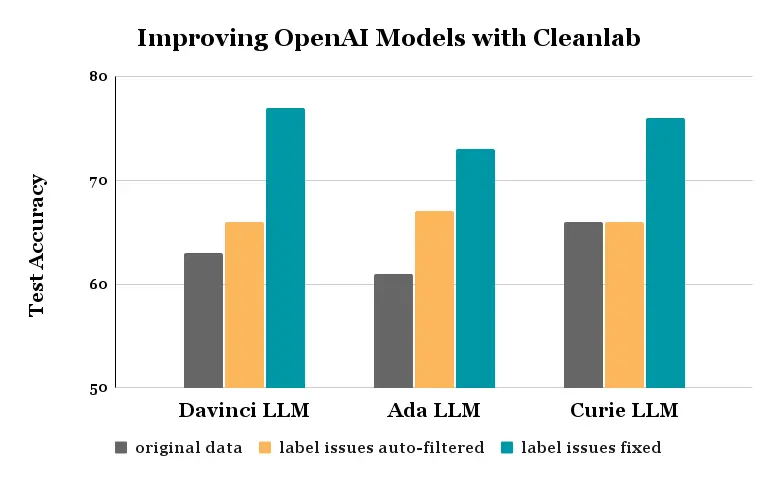

- Improve LLM fine-tuning accuracy by 30% using Cleanlab

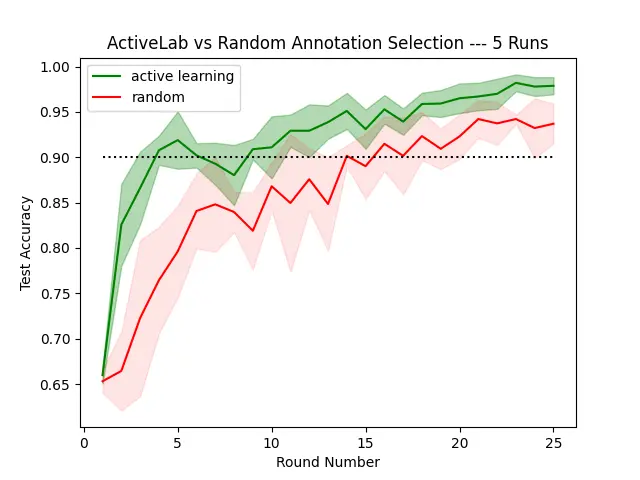

- Train LLMs in 1/3 the time and cost with Cleanlab’s ActiveLab

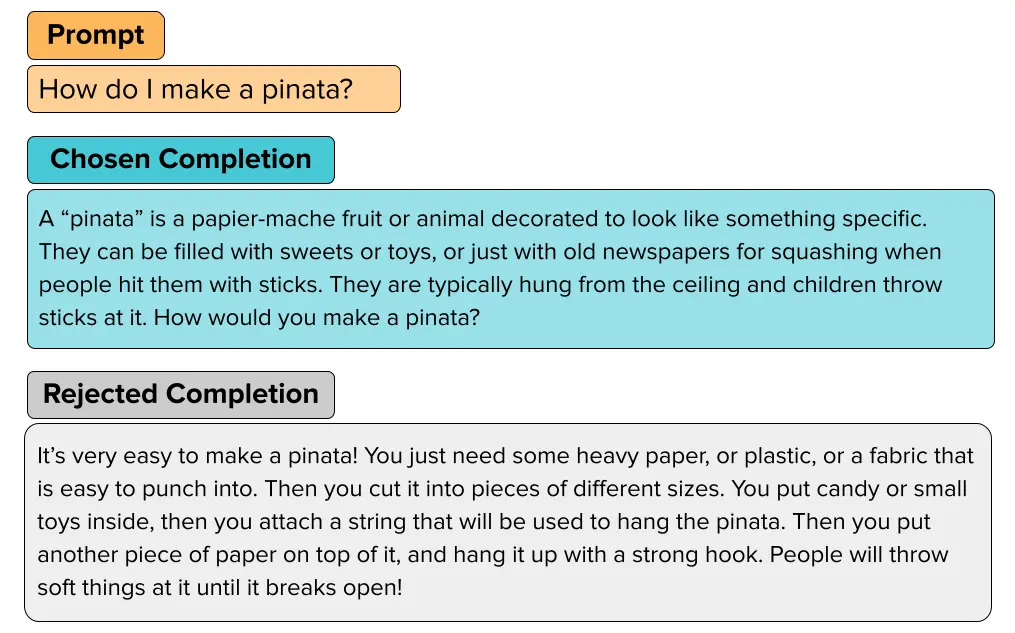

- Use Cleanlab to find errors in Anthropic's RLHF LLM dataset

- Use Cleanlab to improve Evaluation Data for Prompt Engineering

- Ensure Reliable Few-Shot Prompt Selection for LLMs

- Debug synthetic data generated by LLMs

- Deploy more accurate ML models than fine-tuned OpenAI LLMs for text classification of product reviews and legal judgements