Data Annotation & Crowdsourcing

Case StudyGoogle

Cleanlab is well-designed, scalable and theoretically grounded: it accurately finds data errors, even on well-known and established datasets.

Cleanlab is now one of my go-to libraries for dataset cleanup.

Case StudyBanco Bilbao Vizcaya Argentaria (BBVA)

[We used Cleanlab in] an update of one of the functionalities offered by the BBVA app: the categorization of financial transactions. These categories allow users to group their transactions to better control their income and expenses, and to understand the overall evolution of their finances. This service is available to all users in Spain, Mexico, Peru, Colombia, and Argentina.

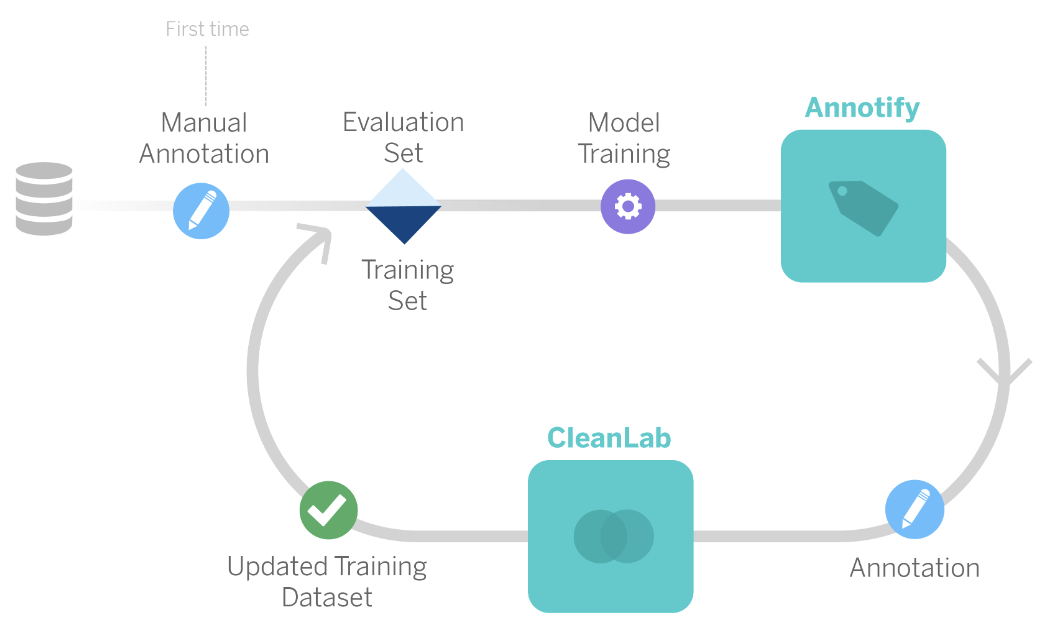

We used AL [Active Learning] in combination with Cleanlab.

This was necessary because, although we had defined and unified annotation criteria for transactions, some could be linked to several subcategories depending on the annotator’s interpretation. To reduce the impact of having different subcategories for similar transactions, we used Cleanlab for discrepancy detection.

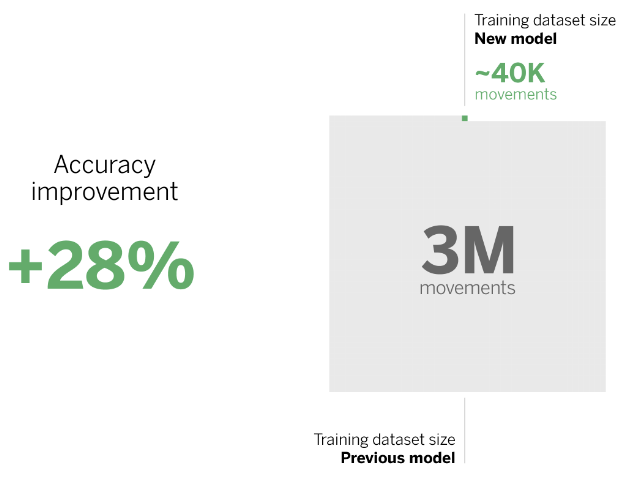

With the current model, we were able to improve accuracy by 28%, while reducing the number of labeled transactions required to train the model by more than 98%.

CleanLab assimilates input from annotators and corrects any discrepancies between similar samples.

CleanLab helped us reduce the uncertainty of noise in the tags. This process enabled us to train the model, update the training set, and optimize its performance. The goal was to reduce the number of labeled transactions and make the model more efficient, requiring less time and dedication. This allows data scientists to focus on tasks that generate greater value for customers and organizations.

Case StudyGavagai

At Gavagai, we rely on labeled data to train our models, both publicly available datasets and data we have annotated ourselves. We know that the quality of the data is paramount when it comes to creating machine learning models that can produce business value for our customers.

Cleanlab Studio is a very effective solution to calm my nerves when it comes to label noise!

The tool allows me to upload a dataset and obtain a ranked list of all the potential label issues in the data in just a few clicks. The label issues can then be assessed and fixed right away in the GUI.

Cleanlab should be a go-to tool in every ML practitioners toolbox!

Cleanlab is used for automated quality assurance at leading data annotation companies

· Scale blog: Peer-Reviewed Doesn’t Mean Perfect Data

· Argilla tutorial: Find label errors with cleanlab

· Datagen blog: The Danger of Hand Labeling Your Data

HOW CLEANLAB CAN HELP YOUR DATA ANNOTATION TEAM

Use our CrowdLab system to analyze data labeled by multiple annotators and estimate:

- A consensus label for each instance that aggregates the individual annotations.

- A quality score for each consensus label to gauge confidence that it is the correct choice.

- A quality score for each annotator to quantify the overall correctness of their labels.

Use our ActiveLab system (active learning with relabeling) to efficiently collect new data labels for training accurate models.

- Obtain reliable data labels even with (multiple) imperfect annotators.

- Only ask for labels that will significantly improve your model.

Compare quality of different data providers and data sources. Listen to a discussion on this topic in the Weights & Biases podcast.

Use least expensive data provider to first obtain noisy labels, and then ask more expensive provider (or in-house experts) to review select examples flagged by Cleanlab.

AI algorithms invented by Cleanlab scientists provide automated quality assurance for data annotation teams working across diverse applications (speech transcription, autonomous vehicles, industrial quality control, image segmentation, object detection, intent recognition, entity recognition, content moderation, document intelligence, LLM evaluation and RLHF, ...)

Read about active learning to efficiently annotate image data.

Read about analyzing multi-annotator text data with CrowdLab.

Read about efficiently annotating text data for Transformers with active learning.

Related applications

Foundation and Large Language Models

Boost fine-tuning accuracy and reduce time spent

Data Entry, Management, and Curation

AI expert review of your data stores to find errors or incorrect labels.

Business Intelligence / Analytics

Correct data errors for more accurate analytics/modeling enabling better decisions.