Content Moderation

Find labeling errors; decide when another review is appropriate; identify your best and worst moderators; deploy robust ML models in one click.

Case Study: Toxic Language Detection @ VAST-OSINT

A while back, I made a toxic language classifier. However, I was unsatisfied with the training data, […] I split the text by sentences while retaining the original label, hoping I'd be able to quickly clean-up, but that didn't work well.

I took the sentence-labeled training data and threw it at cleanlab to see how well confident learning could identify the incorrect labels. These results look amazing to me.

If nothing else, this can help identify training data to TOSS if you don't want to automate correction.
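
The confident-learning step described in the quote above can be illustrated with a small sketch. This is a simplified, pure-Python version of the idea and not cleanlab's actual implementation: the function name `find_likely_label_errors`, the toy labels, and the toy probabilities are all assumptions made for illustration. It assumes you already have per-class predicted probabilities from some classifier, ideally out-of-sample (for example from cross-validation).

```python
def find_likely_label_errors(labels, pred_probs):
    """Return indices of examples whose given label looks wrong.

    labels:     list of int class ids (the given, possibly noisy labels)
    pred_probs: list of per-class probability lists, one per example
    """
    n_classes = len(pred_probs[0])

    # Per-class threshold: the mean predicted probability of class k
    # over the examples that were labeled k.
    sums = [0.0] * n_classes
    counts = [0] * n_classes
    for y, p in zip(labels, pred_probs):
        sums[y] += p[y]
        counts[y] += 1
    thresholds = [
        sums[k] / counts[k] if counts[k] else 1.0 for k in range(n_classes)
    ]

    suspects = []
    for i, (y, p) in enumerate(zip(labels, pred_probs)):
        # Classes the model is confident about for this example.
        confident = [k for k in range(n_classes) if p[k] >= thresholds[k]]
        if not confident:
            continue  # no confident class, so make no claim about this label
        best = max(confident, key=lambda k: p[k])
        if best != y:
            suspects.append(i)  # model confidently disagrees with the label
    return suspects


# Toy data (hypothetical): 0 = non-toxic, 1 = toxic.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.90, 0.10],
    [0.80, 0.20],
    [0.10, 0.90],  # labeled non-toxic, but the model is confident it is toxic
    [0.15, 0.85],
    [0.10, 0.90],
    [0.30, 0.70],
]
print(find_likely_label_errors(labels, pred_probs))  # [2]
```

The flagged indices are candidates to relabel or, as the quote suggests, to toss. The open-source cleanlab library provides a fuller version of this through `cleanlab.filter.find_label_issues`, which takes the same two inputs (given labels and predicted probabilities).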

Case Study: ShareChat

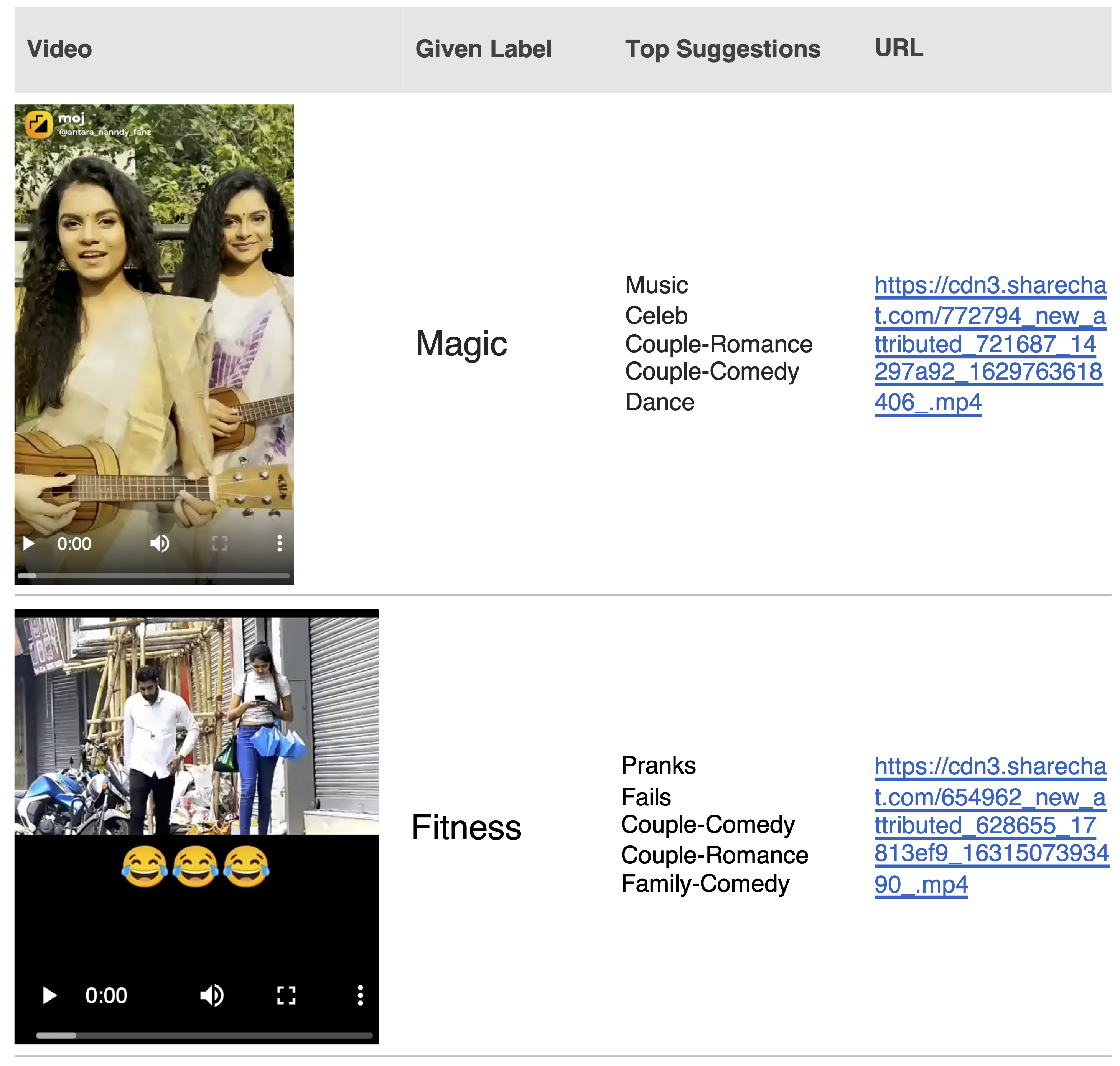

· Cleanlab automatically identified an error rate of 3% in the concept categorization process for content in the Moj video-sharing app. Shown are a couple mis-categorized examples that Cleanlab detected in the app.

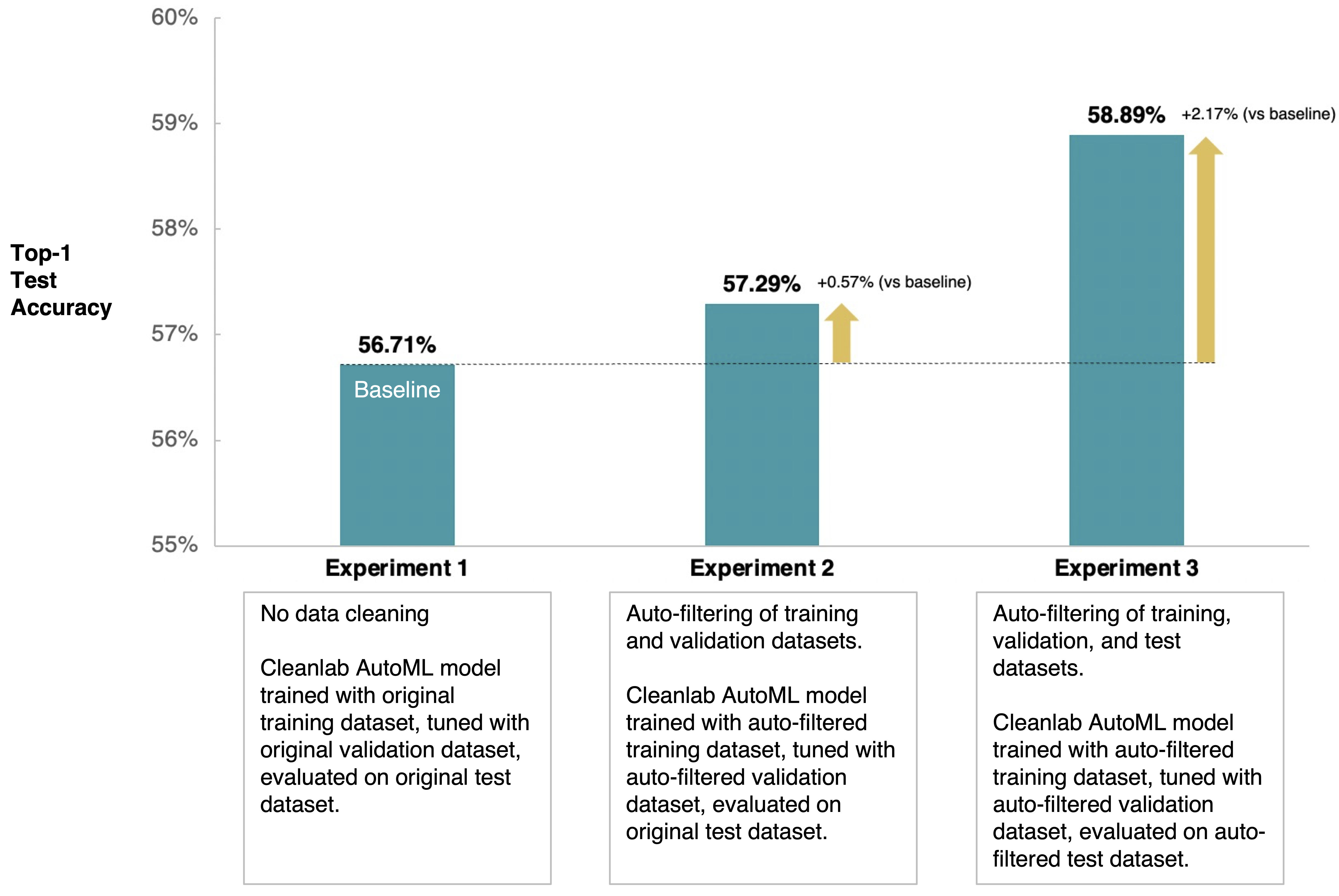

· For this dataset, Cleanlab Studio’s AutoML automatically produced a more accurate visual concept classification model (56.7% accuracy) than ShareChat’s in-house 3D ResNet model (52.6% accuracy). Auto-correcting the dataset immediately boosted Cleanlab AutoML accuracy to 58.9% (see bar chart below).

HOW CLEANLAB HELPS YOU BETTER MODERATE CONTENT

Videos on using Cleanlab Studio to find and fix incorrect values in:

Train and deploy state-of-the-art content moderation/categorization models (with well-calibrated uncertainty estimates) in one click. Cleanlab Studio automatically applies the most suitable foundation models, LLMs, and AutoML systems for your content.

Quickly find and fix issues in a content dataset (categorization errors, outliers, ambiguous examples, near duplicates), then easily deploy a more reliable ML model.

Determine which of your moderators are performing best and worst overall.
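
One rough way to compare moderators, sketched here under stated assumptions, is to score each one by how often they agree with the majority vote on items reviewed by several moderators. This is an illustrative baseline and not a description of how Cleanlab Studio scores moderators; the function name `moderator_agreement`, the moderator names, and the data are hypothetical.

```python
from collections import Counter


def moderator_agreement(annotations):
    """Score each moderator by agreement with the majority label.

    annotations: dict mapping item id -> dict of {moderator: label}
    Returns a dict mapping moderator -> fraction of items where their
    label matched the majority vote (their own vote is included in it).
    """
    agree = Counter()
    total = Counter()
    for votes in annotations.values():
        if len(votes) < 2:
            continue  # a single vote gives no basis for comparison
        consensus, _ = Counter(votes.values()).most_common(1)[0]
        for moderator, label in votes.items():
            total[moderator] += 1
            agree[moderator] += int(label == consensus)
    return {m: agree[m] / total[m] for m in total}


# Hypothetical review data: three moderators, four posts.
annotations = {
    "post1": {"ana": "toxic", "ben": "toxic", "cy": "ok"},
    "post2": {"ana": "ok", "ben": "ok", "cy": "ok"},
    "post3": {"ana": "toxic", "ben": "toxic", "cy": "toxic"},
    "post4": {"ana": "ok", "ben": "toxic", "cy": "toxic"},
}
scores = moderator_agreement(annotations)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(name, score)
```

Majority vote treats every moderator as equally reliable and breaks ties arbitrarily, so it is only a starting point. Approaches that weight moderators by estimated quality can give a fairer ranking.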

Confidently make model-assisted moderation decisions in real time, deciding when to flag content for human review and when to request a second or third review (for hard examples).
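
The routing decision described above can be sketched as a simple rule on the model's confidence. This is a minimal illustration, assuming a classifier that returns well-calibrated class probabilities; the function name `route_content` and both threshold values are hypothetical and would need tuning on your own data.

```python
def route_content(pred_probs, auto_threshold=0.95, review_threshold=0.70):
    """Decide how to handle one piece of content.

    pred_probs: list of class probabilities from a moderation model
    Returns (route, predicted_class) where route is one of:
      "auto"          - confident enough to act on the model's prediction
      "human_review"  - send to a single moderator
      "second_review" - hard example, request more than one reviewer
    """
    top = max(pred_probs)
    predicted = pred_probs.index(top)
    if top >= auto_threshold:
        return ("auto", predicted)
    if top >= review_threshold:
        return ("human_review", predicted)
    return ("second_review", predicted)


print(route_content([0.98, 0.02]))  # ('auto', 0)
print(route_content([0.20, 0.80]))  # ('human_review', 1)
print(route_content([0.55, 0.45]))  # ('second_review', 0)
```

Fixed thresholds like these only make sense if the probabilities are well calibrated, which is why the uncertainty estimates mentioned earlier matter for this kind of workflow.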

Read about analyzing politeness labels provided by multiple data annotators.

Read about automatic error detection for image/text tagging datasets.